If Heroku were a person, it’d be that genius friend who’s always five steps ahead, casually solving complex problems while the rest of us are still figuring out where to start. Sure, you’ve heard of Heroku, and maybe you even know (kind of) what it does and what Heroku application development involves.

But do you really know why it’s the real deal?

Let’s dive into why Heroku application development is the secret sauce your tech company has been looking for, all with a dash of humor, and some solid examples.

What Makes Heroku Application Development Useful?

Let’s address the elephant in the room: Yes, Heroku is a Platform as a Service (PaaS). But if that phrase makes your eyes glaze over, here’s the TL;DR: Heroku takes your application code and makes it production-ready with fewer headaches than a well-written JavaScript function.

Think of it as the IKEA of cloud application deployment. Sure, you could hand-build your app’s infrastructure in AWS or Google Cloud. But do you really want to? Heroku application development gives you the flat-pack furniture equivalent: pre-assembled, efficient and extremely good-looking.

Case Study: Slack

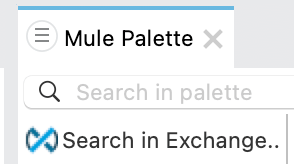

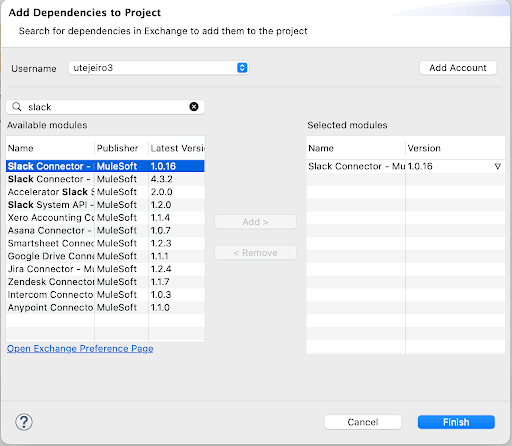

You know Slack, the thing you spend 90% of your workday on? It started on Heroku. In its early days, Slack relied on Heroku’s simplicity and scalability to focus on perfecting its app, not wrangling servers.

By the time Slack became the workplace sensation it is today, Heroku had done its job—letting them scale and migrate seamlessly when the time came.

Why Developers Actually Love Heroku (Yes, Love)

Developers don’t typically gush about platforms. They usually have a thing or two they would change. Yet Heroku has managed to inspire affection and borderline devotion among its users. Why? Because it takes care of the grunt work so you can focus on building things people care about.

1. Deployment: One Fast Push, and You’re Done with Heroku Application Development

Heroku’s deployment process is so simple, it almost feels wrong. Push your code to your app’s Heroku Git remote (typically with git push heroku main), and Heroku builds and releases it for you. No hand-configuring servers. No late-night calls to your DevOps team. Just push and watch the magic happen.
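To make "just push" a little more concrete, here is a minimal sketch of an app that could be deployed this way. It relies on one thing Heroku does for web processes: it passes the port to listen on through the PORT environment variable. Everything else is an assumption for illustration: the file name app.py, the `HelloHandler` class, the `resolve_port` helper, and a one-line Procfile such as `web: python app.py` to tell Heroku how to start it.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET with a short plain-text body."""

    def do_GET(self):
        body = b"Hello from a dyno\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def resolve_port(environ):
    """Return the port given in PORT as an int, or None when PORT is unset."""
    value = environ.get("PORT")
    return int(value) if value else None


if __name__ == "__main__":
    port = resolve_port(os.environ)
    if port is None:
        # Outside Heroku, PORT is usually unset; supply one by hand to test.
        print("PORT is not set; try: PORT=8000 python app.py")
    else:
        # Listen on all interfaces so the platform's router can reach the app.
        HTTPServer(("0.0.0.0", port), HelloHandler).serve_forever()
```

A real app would more likely use a web framework and a production server, but the contract stays the same: read PORT, listen on it, and let the platform handle the rest.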

Example: A FinTech Startup’s MVP

Picture this: A fledgling FinTech startup wants to launch a minimum viable product (MVP) fast. They could spend weeks setting up AWS or Azure, or they could deploy on Heroku in a single afternoon.

They go with Heroku, spend the time saved on refining their app’s features and attract their first big investor. Efficiency pays off, literally.

2. Add-Ons: Your App’s Swiss Army Knife

Heroku’s add-ons marketplace is the tech equivalent of a candy store, offering integrations for everything from databases (PostgreSQL, anyone?) to analytics tools. Need Redis for caching? Done. Want New Relic for performance monitoring? Easy.

Example: An E-Commerce Platform

An e-commerce startup on Heroku uses the ClearDB add-on for MySQL and the Papertrail add-on for log management. They track performance with New Relic and send real-time updates via a Twilio integration. In less than a week, they’ve built a fully functional platform with all the bells and whistles—no backend panic attacks required.
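On the code side, an add-on usually shows up as a config var: an environment variable holding a connection URL. The sketch below shows one way an app might unpack such a URL. It assumes the variable is named DATABASE_URL, the name Heroku Postgres conventionally uses; other add-ons set their own names, so check the add-on's documentation. The function names and the sample URL are hypothetical.

```python
import os
from urllib.parse import urlparse


def parse_database_url(url):
    """Split a connection URL into the pieces a database driver expects."""
    parts = urlparse(url)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }


def load_database_config(environ=os.environ, var_name="DATABASE_URL"):
    """Read the config var (name is an assumption) and parse it.

    Raises KeyError if the variable is missing, which is a useful early
    signal that the add-on has not been attached to the app.
    """
    return parse_database_url(environ[var_name])


# Made-up URL for illustration only; real values come from the platform.
sample = parse_database_url("postgres://app_user:s3cret@db.example.com:5432/shop")
print(sample["host"], sample["port"], sample["database"])
```

Because the credentials live in the environment rather than in the codebase, swapping or upgrading an add-on generally means changing a config var, not the code.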

3. Scaling: Heroku Doesn’t Break a Sweat

Ah, scalability. The Achilles’ heel of many promising apps. Heroku handles scaling with something it calls “dynos.” Don’t let the name scare you; it’s basically a fancy term for virtualized containers. Need more capacity? Just spin up more dynos. It’s so smooth, you might forget scaling was supposed to be stressful.
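How many dynos is "more dynos"? A back-of-the-envelope sketch, under loudly stated assumptions: every number here (peak requests per second, what one dyno can serve, the 25% headroom) is hypothetical and would come from your own load testing. The helper names are made up for illustration; the only real thing is the Heroku CLI command `heroku ps:scale`, which the second function merely formats as a string.

```python
import math


def dynos_needed(peak_rps, rps_per_dyno, headroom=0.25):
    """Estimate the dyno count for a traffic peak.

    peak_rps:     expected peak requests per second (assumed, from forecasts)
    rps_per_dyno: requests per second one dyno sustains (assumed, from load tests)
    headroom:     extra fraction of capacity kept in reserve (assumed)
    """
    if peak_rps < 0 or rps_per_dyno <= 0 or headroom < 0:
        raise ValueError("peak_rps and headroom must be >= 0, rps_per_dyno > 0")
    # Always keep at least one dyno so the app stays reachable.
    return max(1, math.ceil(peak_rps * (1 + headroom) / rps_per_dyno))


def scale_command(process_type, count):
    """Format the Heroku CLI command that would apply the estimate."""
    return f"heroku ps:scale {process_type}={count}"


count = dynos_needed(peak_rps=400, rps_per_dyno=100)  # 400 * 1.25 / 100 -> 5
print(scale_command("web", count))  # prints "heroku ps:scale web=5"
```

The arithmetic is deliberately naive: it treats dynos as interchangeable and traffic as evenly spread, which is a reasonable first guess rather than a capacity plan.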

Case Study: The Election Data Tracker

During the 2020 U.S. election, a data visualization app was needed to handle massive traffic spikes as millions of people checked real-time results. Hosting on Heroku allowed them to scale dynamically, adding dynos on the fly without crashing under the load. Try pulling that off with a homemade server setup.

But Is Heroku Too Simple for Serious Tech?

Critics sometimes claim Heroku is “just for startups” or “too expensive for large-scale use.” Let’s unpack that.

Sure, Heroku isn’t designed for managing 100,000 microservices in a hyperscale environment (looking at you, Kubernetes). But for 99% of applications, its simplicity saves time and money in the long run.

Example: SaaS Company Migration

A mid-sized SaaS company ran their app on Heroku for five years, during which they grew from 10 to 200 employees. When they outgrew Heroku’s capacity, the transition to AWS was straightforward — thanks to the groundwork Heroku had laid. No regrets, just growth.

Who Should Use Heroku?

1. Startups

When it comes to rapid deployment and prototyping, Heroku is a no-brainer. Its user-friendly platform allows you to quickly build, test and deploy applications with minimal setup and configuration, making it ideal for projects that require fast iteration and quick go-to-market timelines.

2. Small to Mid-Sized Teams

If you don’t have a dedicated DevOps team (or if your current team is perpetually swamped with tasks), Heroku is the perfect solution to keep things moving smoothly. Its powerful platform simplifies deployment, monitoring, and scaling, allowing your team to focus on development rather than infrastructure management.

3. Enterprise Experiments

Big companies can leverage Heroku for a variety of purposes, from side projects and proof-of-concepts to internal tools. Heroku’s flexibility and ease of use make it an ideal platform for quickly bringing ideas to life without the complexity of managing infrastructure.

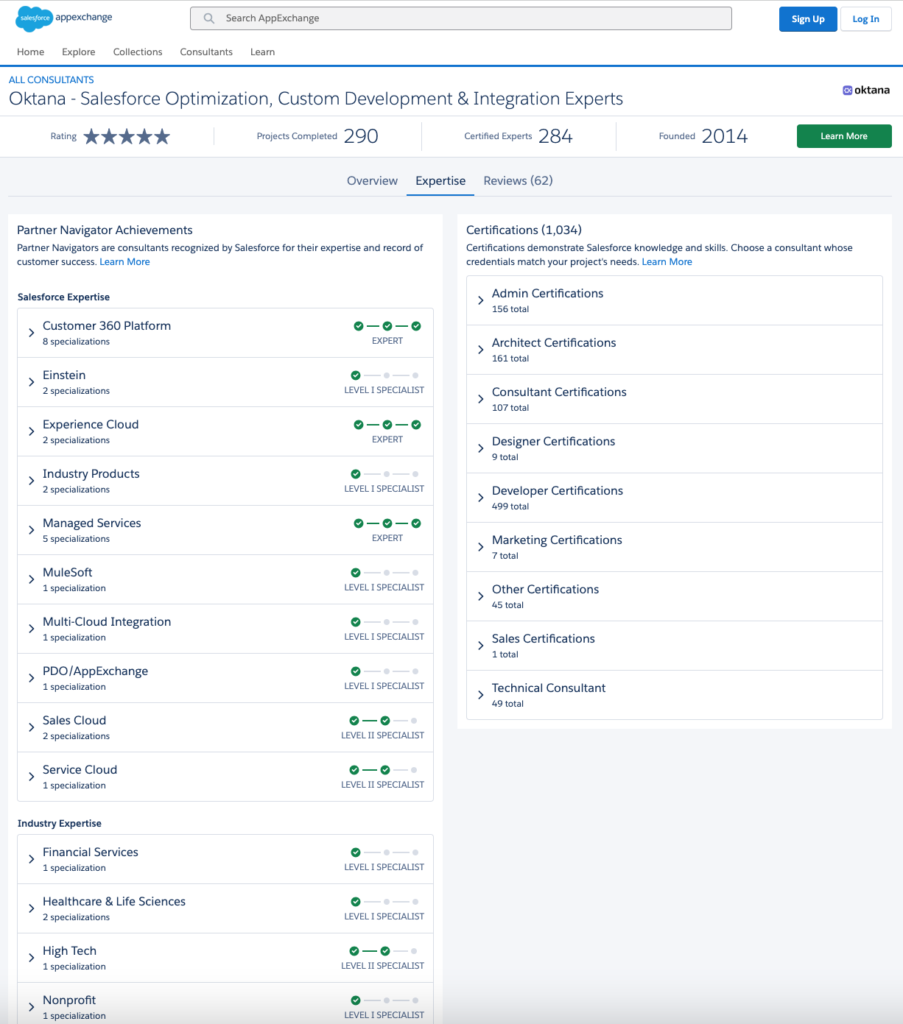

But Wait, What About Oktana?

If you’re sold on Heroku’s application development potential but wondering how to wield it like a pro, that’s where Oktana comes in. Whether you’re deploying your first app or optimizing a complex architecture, we bring the expertise to make Heroku work for your specific needs.

We’ve partnered with tech companies across industries to build and scale apps using Heroku. From crafting tailored solutions to ensuring smooth migrations, our team takes the platform’s power and makes it your competitive advantage.

Ready to see what Heroku can really do? Check out Oktana’s Heroku application development expertise.