It’s time to rethink AI agents in your business strategy. The conversation around AI agents is shifting fast, and the current resistance won’t last. As enterprise organizations explore how AI fits into their operations, one thing is becoming increasingly clear: it’s no longer a question of if AI agents will be part of your IT ecosystem, but when. And more importantly, how.

AI agents represent a strategic transformation in how businesses approach productivity, scalability, and data security. These agents can reason, learn from interactions, and execute complex workflows with minimal supervision. In practical terms, that means fewer routine tasks for human teams, faster response times, and smarter automation.

The Data Behind the Movement

The trend is hard to miss. According to the latest Gartner report:

- By 2026, over 20% of enterprises will have employees actively collaborating with AI agents.

- By 2028, 15% of daily business decisions will be made autonomously.

- By 2030, AI is projected to handle 80% of all customer interactions.

If you’re in a leadership role, these shifts should be on your radar. When adoption reaches a tipping point, lagging behind will mean more than operational inefficiency: it will mean losing ground in competitiveness and innovation.

Three Pillars Driving the Decision

1. Sales ROI & Operational Optimization

According to the Agentforce ROI Calculator and Gartner projections, companies see:

- 25% reduction in time spent on manual outreach.

- 30% cut in support costs, as projected by Gartner.

- 20–30% increase in CSAT (Customer Satisfaction) scores.
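To get a feel for what those percentages mean in dollars, here is a minimal back-of-the-envelope sketch. It applies the 25% outreach reduction and 30% support-cost reduction quoted above to a baseline you supply. The function name and every input figure in the example call are hypothetical placeholders, not numbers from the Agentforce ROI Calculator or from Gartner, and the sketch does not attempt to model CSAT gains or licensing costs.

```python
def estimate_annual_savings(
    outreach_hours_per_year: float,
    hourly_cost: float,
    support_cost_per_year: float,
    outreach_reduction: float = 0.25,  # 25% less time on manual outreach (figure quoted above)
    support_reduction: float = 0.30,   # 30% cut in support costs (figure quoted above)
) -> float:
    """Rough annual savings estimate; all baseline inputs are the caller's assumptions."""
    # Value of the outreach hours that no longer need to be done manually.
    outreach_savings = outreach_hours_per_year * hourly_cost * outreach_reduction
    # Portion of the yearly support budget that is no longer spent.
    support_savings = support_cost_per_year * support_reduction
    return outreach_savings + support_savings


if __name__ == "__main__":
    # Hypothetical baseline: 2,000 outreach hours at $50/hour and a $400,000 support budget.
    savings = estimate_annual_savings(2000, 50.0, 400_000.0)
    print(f"Estimated annual savings: ${savings:,.0f}")
```

Swap in your own baseline numbers. The result is only as good as those inputs, and it assumes the quoted reductions apply in full to your organization.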

2. Rapid Payback in Weeks, Not Months

Time to value is critical. With AI agents like Agentforce, the impact shows up quickly: organizations typically report the 20–30% CSAT increase noted above as they deliver faster, smarter responses. This isn’t a long-haul investment; it’s a short-term payoff with long-term benefits.

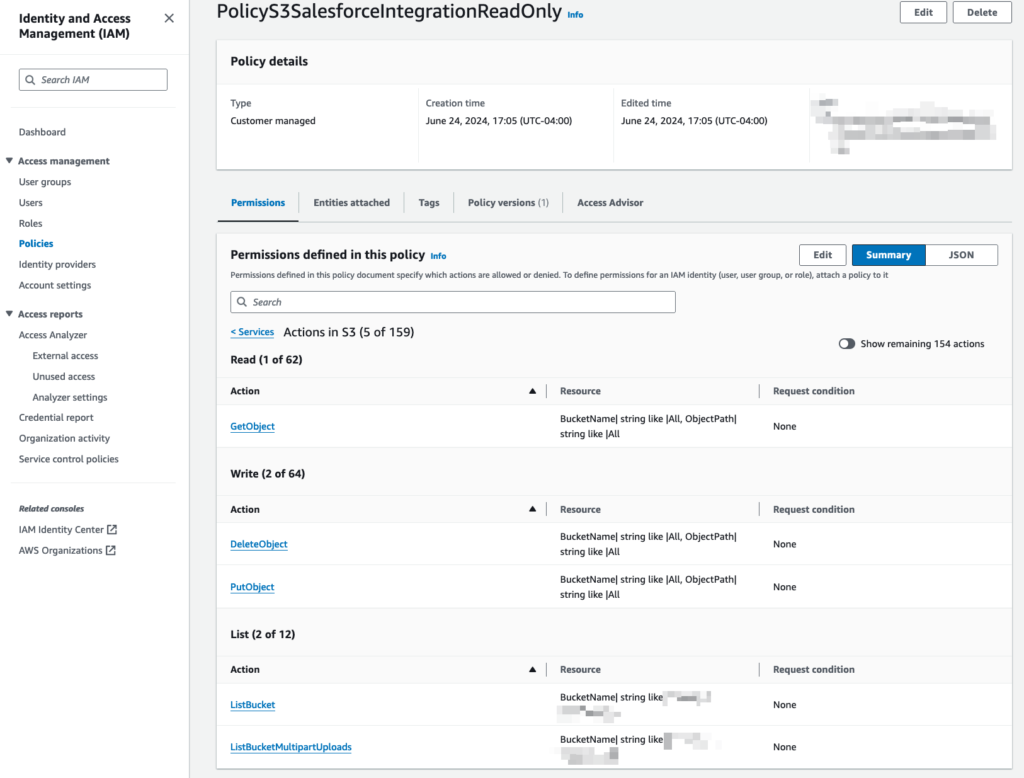

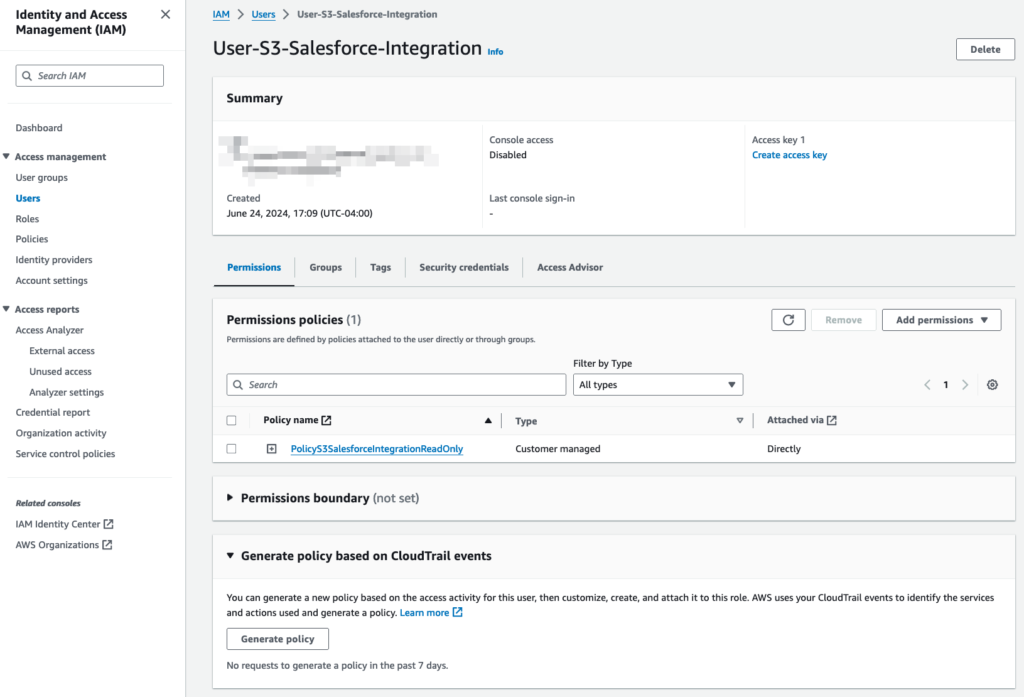

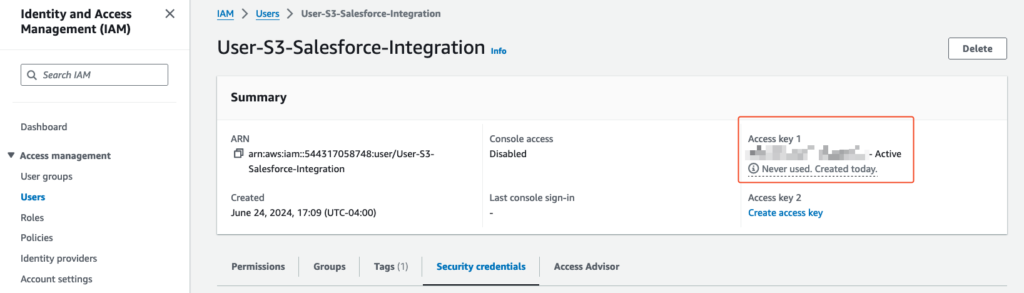

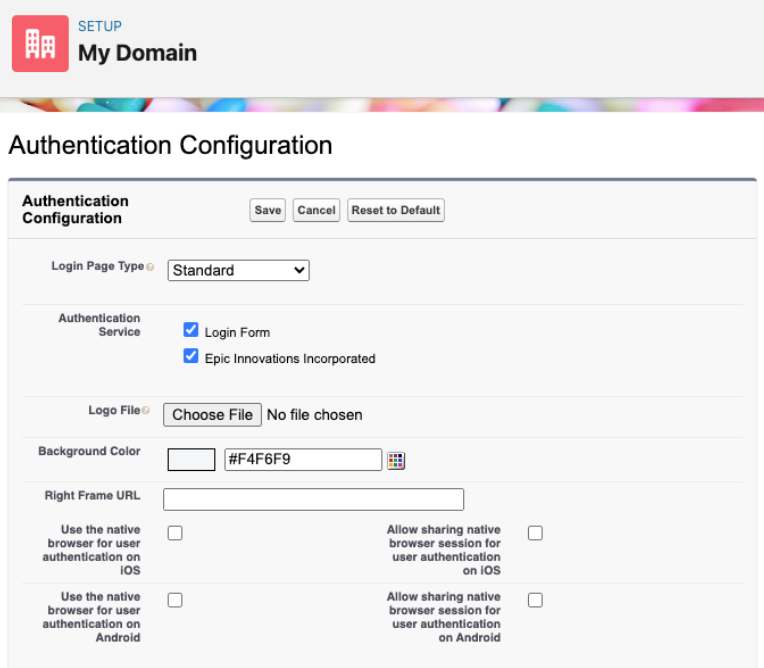

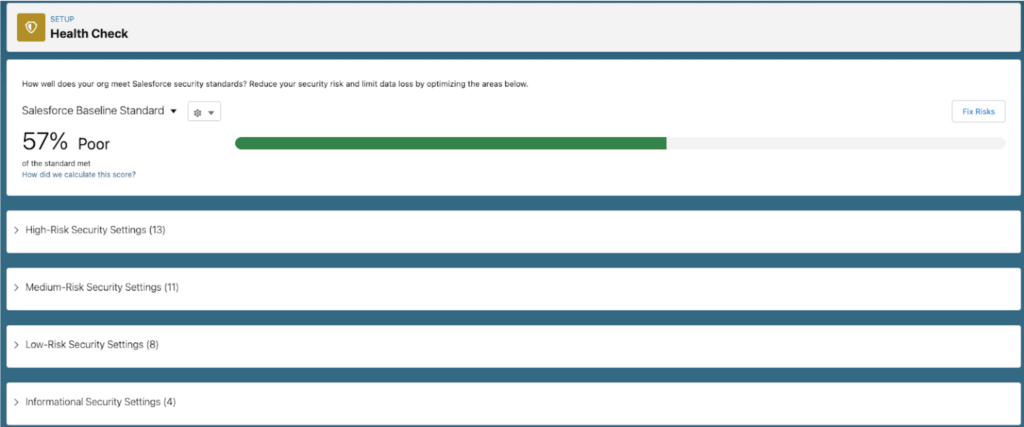

3. Security Matters—Data Privacy Is Non-Negotiable

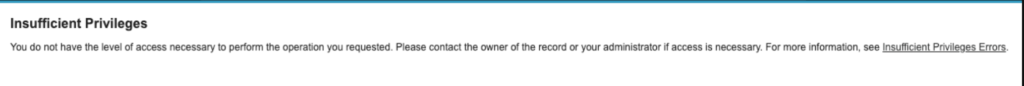

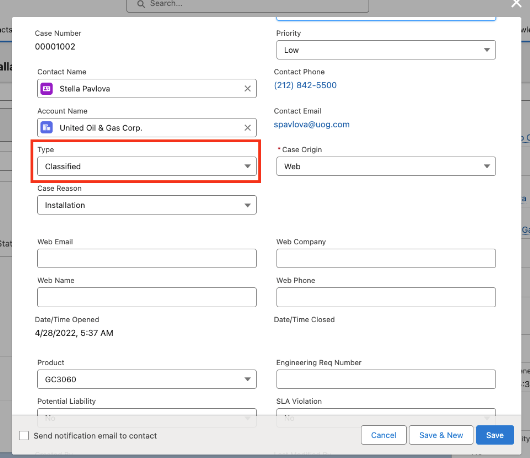

In an era of increasing scrutiny over data practices, security cannot be an afterthought. With Agentforce running inside Salesforce’s Einstein Trust Layer, your data stays governed by the same permissions, sharing rules, and compliance standards you already trust. And with over 40 certifications, including HIPAA and FedRAMP, your AI implementation meets established security benchmarks without additional integration headaches.

Interact with our Demo

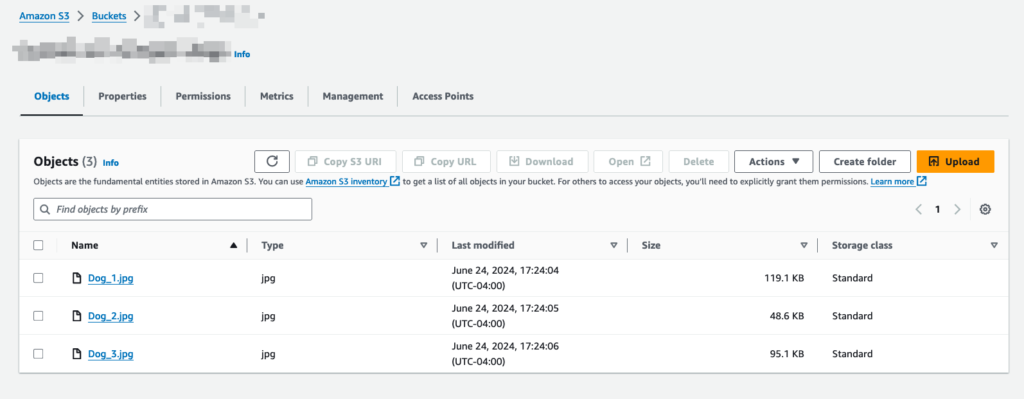

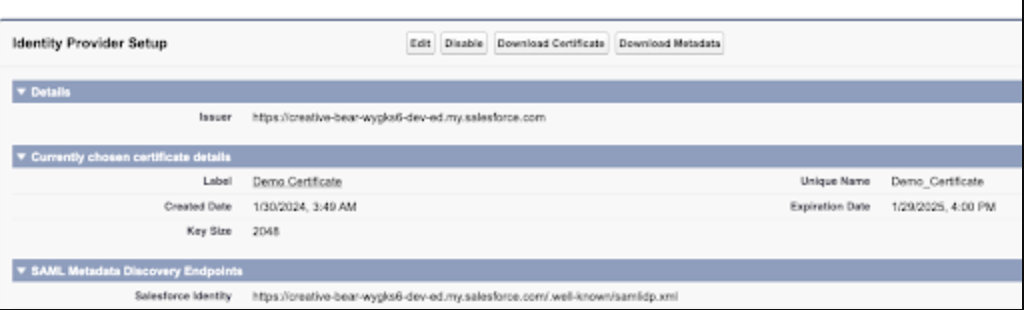

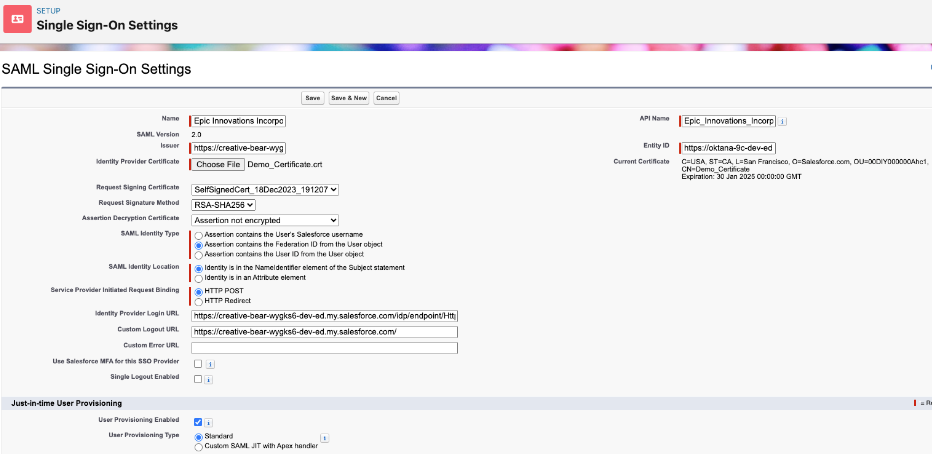

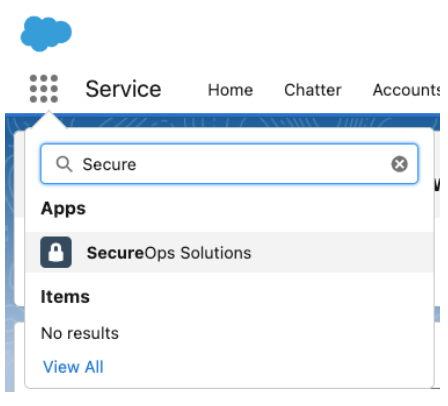

Despite being low-code and natively integrated into Salesforce, deploying AI agents still requires strategy. You need to ensure prompt quality, integrate with legacy systems, and optimize for your unique data structures.

We’ve helped customers build and launch AI agents across sales, support, and internal operations. Whether it’s syncing workflows in Slack, calibrating prompts in multilingual support portals, or integrating with Salesforce Flows and Apex, we bring real implementation experience to the table.

Our approach goes beyond tech. We focus on alignment—with your data, your teams, and your business goals.

Let’s talk! Contact us to book a free 30-minute assessment and explore how Agentforce can reshape your business operations.