Every business depends on data, but most don’t have control over how it moves. Different tools collect it. Different teams handle it. Every department builds their own workarounds to fill the gaps. Over time, the entire system becomes fragile—slow to update, hard to trust, and nearly impossible to scale.

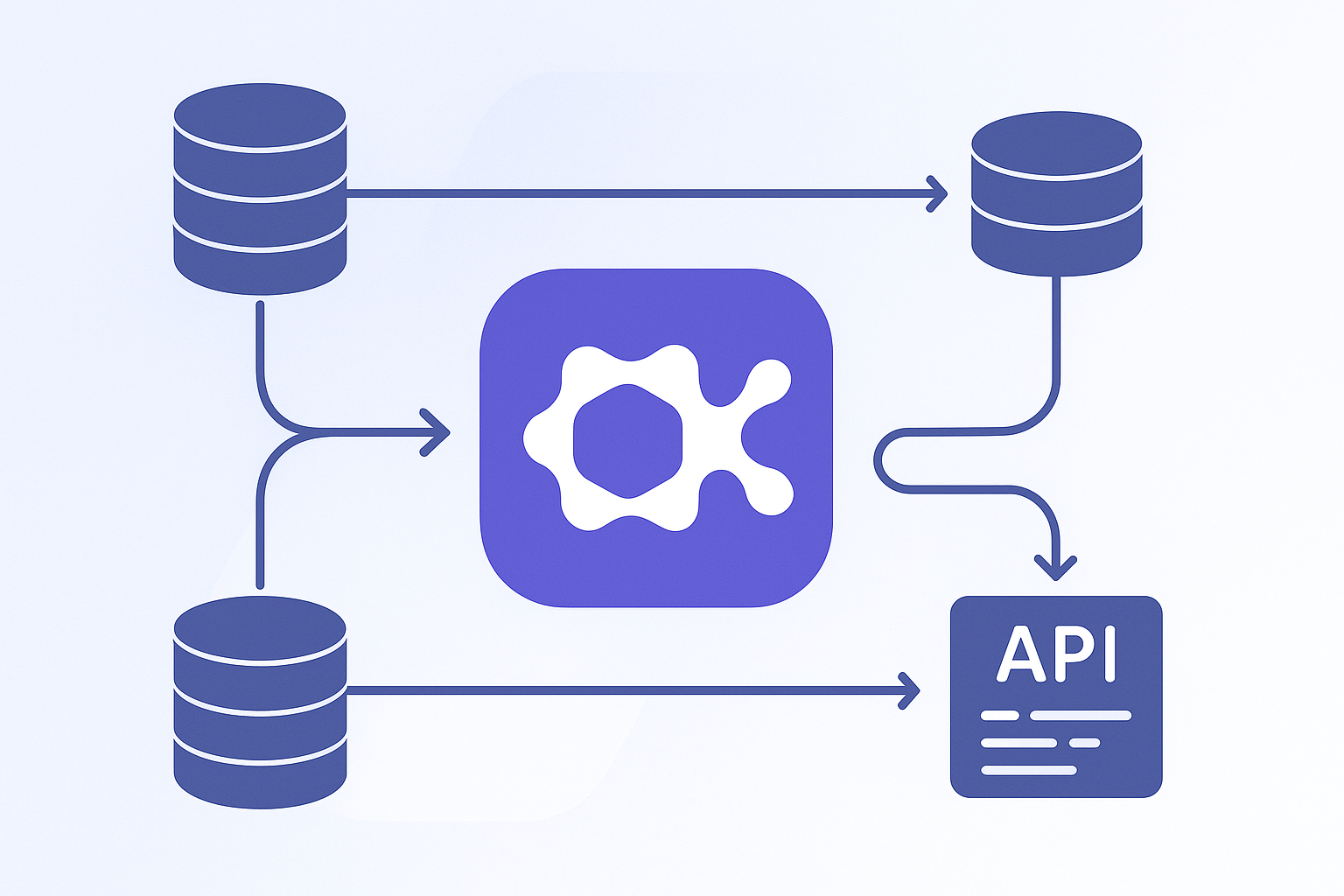

The core problem isn’t storage or access. It’s the lack of a clear, consistent flow. A good data flow service solves this directly by doing four things well:

Collect: Pull structured and unstructured data from every source—product analytics, CRMs, financial tools, third-party APIs—without duplication or delay.

Clean: Standardize and validate records as they move. Fix mismatches, remove duplicates, and ensure accuracy before anything hits your reports.

Organize: Structure the data around your actual business logic—accounts, users, campaigns, transactions—so it makes sense to every team that uses it.

Access: Surface real-time insights in the platforms your teams already use, from dashboards to automated alerts to operational systems.
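As a rough illustration of the Clean and Organize steps, here is a minimal Python sketch. The field names (`email`, `account`, `source`) and the rule of deduplicating by email are assumptions made for the example, not a description of any specific production pipeline.

```python
from collections import defaultdict


def clean(records):
    """Standardize emails, drop invalid rows, and remove duplicates by email."""
    seen = set()
    cleaned = []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if "@" not in email:
            continue  # validation: skip records without a usable email
        if email in seen:
            continue  # deduplication: keep the first record per email
        seen.add(email)
        cleaned.append({**rec, "email": email})
    return cleaned


def organize(records):
    """Group cleaned records around a business entity (here: the account)."""
    by_account = defaultdict(list)
    for rec in records:
        by_account[rec["account"]].append(rec["email"])
    return dict(by_account)


# Hypothetical records collected from three different tools.
raw = [
    {"email": " Ana@Example.com ", "account": "acme", "source": "crm"},
    {"email": "ana@example.com", "account": "acme", "source": "analytics"},
    {"email": "not-an-email", "account": "acme", "source": "billing"},
    {"email": "bo@example.com", "account": "globex", "source": "crm"},
]
organized = organize(clean(raw))
```

In this sketch the two spellings of the same email collapse into one record and the invalid row never reaches the grouped output.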

Without this kind of architecture in place, companies spend more time reconciling data than acting on it. With it, every team can make decisions based on the same information—fast, accurate, and aligned.

How to Strengthen Data Architecture as Your Organization Grows

The early-stage approach to data flow is usually tactical. Small teams build what they need with what they have. They pull reports, automate exports, sync records through middleware, or write lightweight ETL jobs to meet immediate goals.

This works for a while. But each step adds a layer of fragility. Here’s how those layers build up.

Uncontrolled replication

Most systems deal with integration by copying data between them. The idea is that each platform needs its own complete version of the data. In practice, this creates a sync problem. When the data changes in one place, it has to be updated everywhere. This introduces lag. It adds risk. And in many cases, it duplicates storage unnecessarily.

Timing mismatches

Batch pipelines, scheduled jobs, and export-based processes often run in disconnected windows. As the system grows, so does the timing gap between event and visibility. If a fraud event occurs in the afternoon and your ETL process runs at night, your team will act too late, every time.
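To make the timing gap concrete, the sketch below computes how long an event waits before the next daily batch run picks it up. The 2 a.m. schedule and the event timestamp are made-up values, and the function only models a single once-a-day job.

```python
from datetime import datetime, timedelta


def visibility_delay(event_time, batch_hour):
    """Return the wait between an event and the start of the next daily batch run."""
    run = event_time.replace(hour=batch_hour, minute=0, second=0, microsecond=0)
    if run <= event_time:
        run += timedelta(days=1)  # today's run already happened; wait for tomorrow's
    return run - event_time


# Hypothetical fraud event at 2:30 p.m., with a nightly job that starts at 2 a.m.
event = datetime(2024, 3, 5, 14, 30)
delay = visibility_delay(event, batch_hour=2)
```

Under these assumptions the event stays invisible for eleven and a half hours, before counting the time the job itself takes to finish.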

Fragmented logic

As soon as business rules exist in multiple systems, they drift. One platform flags a risk score using one set of thresholds. Another team builds a report based on different ones. There’s no clear source of truth. Teams act on different interpretations of the same data.

Lack of shared ownership

Data lives across departments. Marketing has one stack. Sales uses another. Analytics depends on both but owns neither. No one person or team is responsible for the whole flow. That makes failure hard to detect and harder to solve.

These issues aren’t the result of poor execution. They are the natural outcome of scaling without a clear architectural strategy.

The Cost of Waiting to Address It

Most companies live with these inefficiencies until they affect accuracy or business efficiency. That moment usually comes when:

- A critical report takes days to produce

- The board asks for numbers that don’t reconcile

- A fraud case goes undetected due to processing lag

- A compliance audit exposes inconsistent access or logic

At that point, the system is full of invisible dependencies. The system needs to be restructured with clear logic, observable pipelines, and timing that matches the business.

This is the kind of work that most teams recognize as necessary but never have time to do. That’s where we come in.

What Oktana Builds and Why It Works

We build data flow systems that support decision-making at speed. That means systems where the right data reaches the right platform with minimal delay, no duplication, and clear logic at every step.

Our work begins with a discovery session where we find out:

- Where is the true source of each type of data?

- How many times is that data moved?

- Where is logic applied, and by whom?

- Who relies on which output, and when?

- What parts of the pipeline break most often, and why?

Once we have that map, we design a pipeline that reflects what the organization actually needs.

Our core principles:

🔹 Minimize Data Movement

We reduce unnecessary duplication by using native data sharing features instead of copying data across systems. For example, when integrating Salesforce and Snowflake, we implement Zero-ETL pipelines that avoid redundant storage and sync delays. Data moves only when it adds real value.

🔹 Centralize Business Logic

Business logic should exist in one place—and flow everywhere else. We define where logic is applied, ensure consistency across platforms, and prevent fragmented rules from living in dashboards, reports, and automation scripts. This eliminates misaligned decisions and makes changes easier to manage.
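One way to picture this principle is a single policy object that every consumer calls instead of re-implementing thresholds. The sketch below is illustrative only: `RiskPolicy`, its threshold values, and the two consumer functions are hypothetical names invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskPolicy:
    """Single definition of the risk thresholds (values are placeholders)."""
    review_threshold: float = 0.6
    block_threshold: float = 0.85

    def classify(self, score: float) -> str:
        if score >= self.block_threshold:
            return "block"
        if score >= self.review_threshold:
            return "review"
        return "allow"


POLICY = RiskPolicy()


def alert_action(score):
    """Operational consumer: decides what to do with a live event."""
    return POLICY.classify(score)


def report_rows(scores):
    """Reporting consumer: labels scores with the same policy."""
    return [(score, POLICY.classify(score)) for score in scores]
```

Because both consumers delegate to `POLICY`, changing a threshold in one place changes alerts and reports together.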

🔹 Make Pipelines Observable

Every pipeline we build is designed to be visible, monitored, and explainable. We provide delay tracking, error logs, throughput metrics, and alerting so teams can detect and resolve issues before they impact the business. When something breaks, you’ll know where, when, and why.
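A minimal sketch of what delay tracking, error logging, throughput counting, and alerting can look like in plain Python follows. The `PipelineMetrics` class, its 60-second delay budget, and the step names are assumptions for illustration; a real deployment would typically rely on a dedicated monitoring stack.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineMetrics:
    max_delay_seconds: float          # alert when any record exceeds this delay
    records: int = 0                  # throughput counter
    errors: list = field(default_factory=list)
    worst_delay: float = 0.0

    def record(self, event_ts, processed_ts):
        """Track one processed record and the delay it experienced."""
        self.records += 1
        self.worst_delay = max(self.worst_delay, processed_ts - event_ts)

    def record_error(self, step, message):
        """Log which step failed and why."""
        self.errors.append((step, message))

    def alerts(self):
        """Return human-readable alerts for delay and error conditions."""
        found = []
        if self.worst_delay > self.max_delay_seconds:
            found.append(
                f"delay {self.worst_delay:.0f}s exceeds {self.max_delay_seconds:.0f}s"
            )
        if self.errors:
            found.append(
                f"{len(self.errors)} error(s), first in step '{self.errors[0][0]}'"
            )
        return found


# Hypothetical timestamps in seconds.
metrics = PipelineMetrics(max_delay_seconds=60)
metrics.record(event_ts=100.0, processed_ts=130.0)
metrics.record(event_ts=200.0, processed_ts=290.0)
metrics.record_error("validate", "missing account id")
alerts = metrics.alerts()
```

The point of the design is that each alert names the step and the size of the problem, which is what lets a team answer where, when, and why.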

🔹 Design for Action, Not Just Analysis

Data should trigger outcomes, not just sit in dashboards. Our systems push insights into the operational tools where decisions happen—CRM, support, compliance, marketing—so teams act in real time, not in retrospectives.

🔹 Build with Governance and Lineage in Mind

We map where data comes from, how it changes, and who touches it. This transparency helps teams trust the data they use, enables audits and compliance, and reduces the risk of hidden dependencies. Every transformation is traceable.

🔹 Secure by Design

Data systems must enforce access controls, data masking, and audit trails from day one. That’s why we design pipelines that respect role-based access, handle sensitive data responsibly, and keep security aligned with compliance standards.
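The sketch below shows how role-based access, masking, and an audit trail can fit together in a few lines. The roles, the sensitive field names, and the keep-last-four masking rule are all hypothetical choices for the example, not a statement of any compliance requirement.

```python
SENSITIVE_FIELDS = {"email", "card_number"}

# Hypothetical role table: which roles may see sensitive values unmasked.
ROLE_CAN_SEE_SENSITIVE = {"compliance": True, "support": False}


def mask(value):
    """Hide everything except the last four characters."""
    text = str(value)
    return "*" * max(len(text) - 4, 0) + text[-4:]


def view_record(record, role, audit_log):
    """Return the record as the given role may see it, and log the access."""
    allowed = ROLE_CAN_SEE_SENSITIVE.get(role, False)  # unknown roles get masked data
    audit_log.append((role, record["id"]))
    return {
        key: value if allowed or key not in SENSITIVE_FIELDS else mask(value)
        for key, value in record.items()
    }


audit_log = []
record = {"id": 7, "email": "ana@example.com", "card_number": "4111111111111111"}
support_view = view_record(record, "support", audit_log)
compliance_view = view_record(record, "compliance", audit_log)
```

Every read goes through one function, so masking and audit logging cannot be skipped by an individual dashboard or script.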

A Real Example: Fixing Fraud Detection for a Global Platform

A major media company that partnered with us was handling millions of user events daily. Their customer data was stored in Salesforce Service Cloud, while behavioral data lived in Snowflake. Their fraud detection process was slow, relying on manual reviews that took days. By the time risks were flagged, users had already been affected and their management teams were playing catch-up.

Oktana stepped up to help them rebuild this process from the ground up. The main goal wasn’t to bring in new tools, but to design a system that actually fit how the company operated.

What We Built

We started by connecting Salesforce Data Cloud to Snowflake using a Zero-ETL data share. This allowed Snowflake to access real-time data without creating storage copies or adding sync delays. We moved 17 data objects from Salesforce into Snowflake specifically for fraud analytics.

From there, fraud detection models ran directly in Snowflake, analyzing current data instead of relying on overnight batches. The results were sent straight into Salesforce CRM Analytics, where support and compliance teams could respond immediately. Oktana also built a custom integration loop to keep this flow running in real time.

The Results

- The system now processes over four times more data than before, with no performance loss.

- Fraud insights appear 90 percent faster, helping teams take action before users are impacted.

- Snowflake query performance improved by 50 percent, making detection both faster and more precise.

- Manual review steps were removed entirely, cutting delay and human error.

- Fraud logic is now consistent across data, analysis, and frontline tools.

Why It Worked

We focused on designing around the company’s real-world workflows. Data Cloud provided a reliable upstream source, Snowflake ran the fraud models on current data, and CRM Analytics served as the front line for team response.

The architecture now supports higher volume, faster decision-making, and a unified view of risk across teams.

If you’re struggling with data silos or slow fraud detection, we’d be glad to explore how this kind of approach could work for you.

When to Re-Architect

If your system meets any of the following conditions, it’s likely time to step back and redesign:

- Data must be extracted and cleaned before it’s usable

- Teams produce reports from separate sources and reconcile manually

- The same metric appears with different values depending on where you look

- Key processes rely on human triggers because the data arrives too late

- No one can say with certainty which system owns what data

These are not normal growing pains. They are early warnings that the system can’t scale further without increasing risk and overhead.

What You Get From a Proper Data Flow Design

- Sales, support, and product teams operate on the same logic

- Data changes once, and the change is reflected everywhere it’s used

- Analysts stop cleaning and start interpreting

- Security teams see where sensitive data flows and who touched it

- Business decisions rely on facts, not approximations

This is what architecture enables. Not with more dashboards or better visuals—but with cleaner structure and clearer responsibility.

Let’s Talk if Your System Feels Like It’s Slowing Down

If your data team is spending more time fixing pipelines than learning from data, the architecture is getting in the way. If teams wait on reports, the system is too slow. If logic doesn’t match across platforms, the system is inconsistent.

We help companies fix this—not by selling more tech, but by engineering flows that match how the organization actually works.

We’ll look at what’s built, what’s breaking, and what needs to change.