What is Scalability?

Scalability is a general software concept. A scalable system is built so that it can grow easily, whether in usage (for example, more people accessing a site) or in functionality (new features added to the site). In other words, it is the software’s ability to keep functioning properly as its context changes.

In Heroku, it refers to the system being able to cope with more users or more traffic.

Heroku Scalability

Heroku provides easy-to-use tools that let developers scale dynos (Heroku app containers) instantly to meet demand. After an app is deployed, it may need adjustments in response to increased traffic, new functionality, or business growth. You can scale using either the Heroku Dashboard or the Heroku CLI.

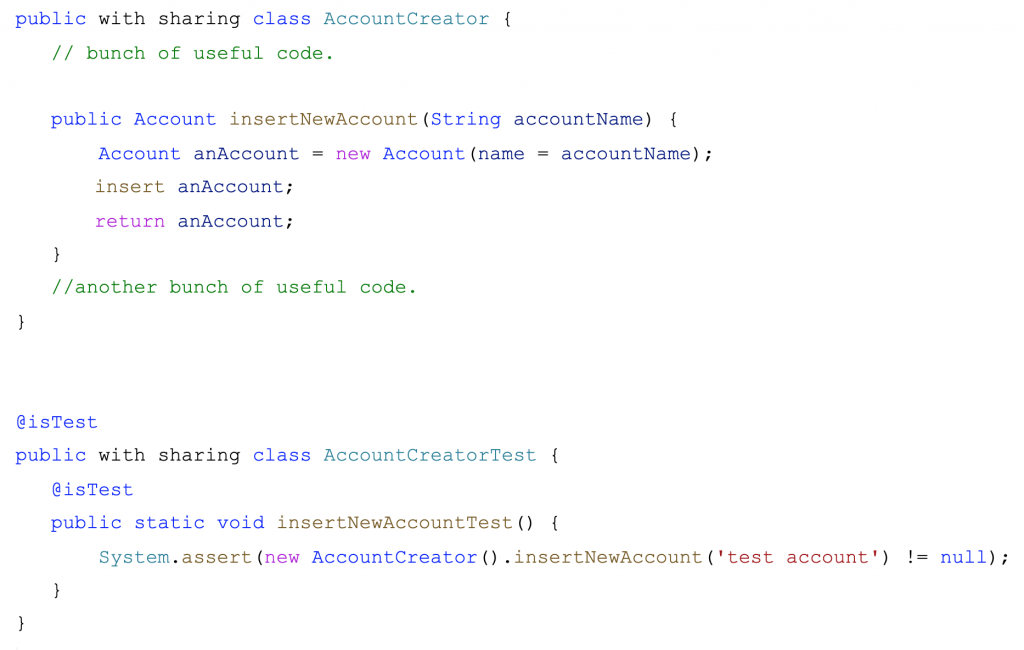

Heroku Dashboard:

The Heroku Dashboard is the web user interface for Heroku’s core features and functionality. It lets developers manage their apps, add-ons, deployment processes, metrics, and much more. It provides a simple slider interface for scaling dynos, and the results are reflected immediately in your dyno formation.

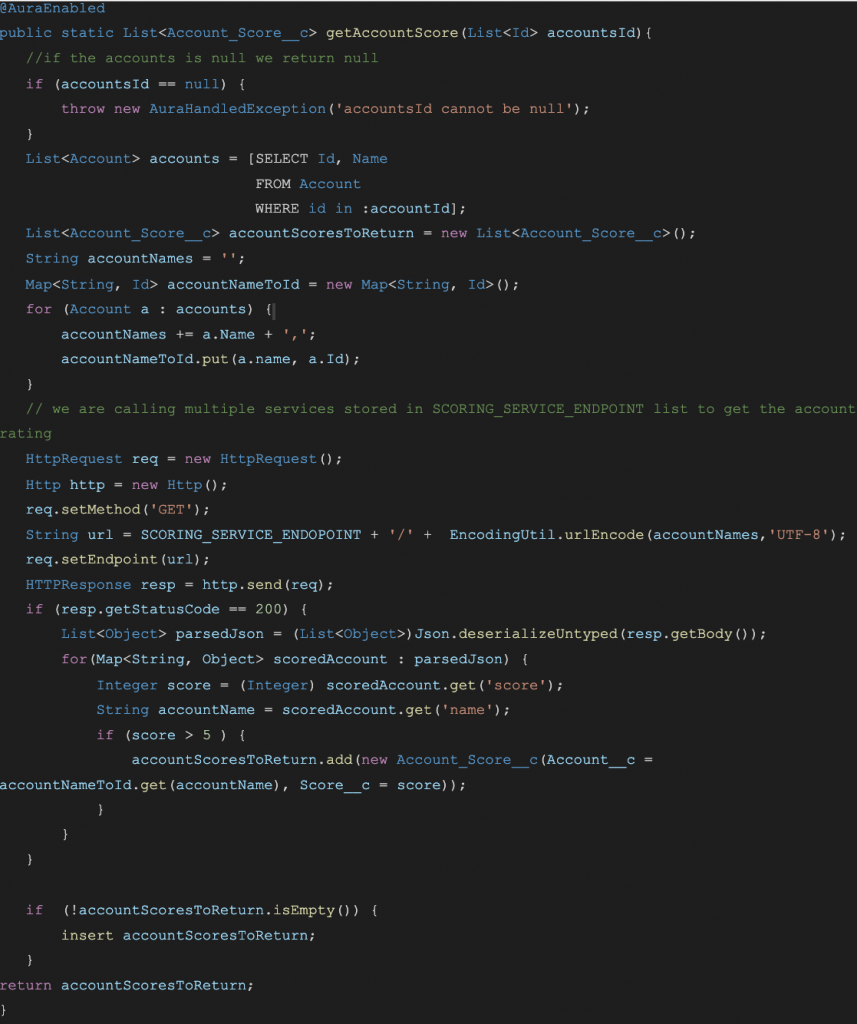

Heroku CLI:

Developers can also manage their dyno formation using the Heroku command-line interface (CLI), which lets you create and manage apps from the shell on various operating systems. With a single command, you can increase the number of web and worker dynos, or change the dyno type of any number of dynos at once.
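As a minimal sketch of what such a command looks like, the Python helper below assembles the arguments for `heroku ps:scale`, the CLI command that sets the dyno quantity and, optionally, the dyno type for each process type. The helper name `build_scale_command` and the app name `example-app` are hypothetical and chosen only for illustration. The function builds the argument list and does not contact Heroku.

```python
from typing import Optional


def build_scale_command(
    app: str,
    formation: dict[str, tuple[int, Optional[str]]],
) -> list[str]:
    """Build the argument list for `heroku ps:scale`.

    `formation` maps a process type (e.g. "web") to (quantity, dyno_type).
    Pass None as dyno_type to leave the current dyno type unchanged.
    """
    args = ["heroku", "ps:scale"]
    for process, (quantity, dyno_type) in formation.items():
        if quantity < 0:
            raise ValueError(f"quantity for {process!r} must be >= 0")
        spec = f"{process}={quantity}"
        if dyno_type is not None:
            spec += f":{dyno_type}"  # e.g. worker=2:standard-2x
        args.append(spec)
    args += ["--app", app]
    return args


if __name__ == "__main__":
    cmd = build_scale_command(
        "example-app",  # hypothetical app name
        {"web": (3, None), "worker": (2, "standard-2x")},
    )
    # Prints: heroku ps:scale web=3 worker=2:standard-2x --app example-app
    print(" ".join(cmd))
```

If the Heroku CLI is installed and you are logged in, the resulting list could be passed to `subprocess.run`. Typing the printed command directly into a shell has the same effect.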

Scalability Resources

Scaling options fall into two broad categories: horizontal and vertical. Heroku also offers a third option, autoscaling.

Scaling horizontally: adding more dynos

Adding more dynos of a given dyno process type scales your application horizontally. For example, adding more web dynos lets Heroku route incoming HTTP requests across more running instances of your web servers, which will typically improve performance for a higher traffic volume.

Likewise, adding more worker dynos lets your app process more jobs in parallel and handle a larger volume of jobs. In some cases scaling horizontally won’t help, for example when the bottleneck is in a backend service or when individual requests or jobs are long-running.
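The limit described above can be illustrated with a deliberately simple capacity model: throughput grows linearly with the number of dynos until a shared backend service becomes the bottleneck. The function name and every number here (50 requests per second per dyno, a backend ceiling of 300) are assumptions made for illustration, not Heroku figures.

```python
def effective_throughput(
    dynos: int,
    per_dyno_rps: float,
    backend_limit_rps: float,
) -> float:
    """Sustainable requests per second under a simple linear model.

    Each dyno contributes `per_dyno_rps`, but the total can never exceed
    what the shared backend service can handle.
    """
    if dynos < 0:
        raise ValueError("dynos must be >= 0")
    return min(dynos * per_dyno_rps, backend_limit_rps)


if __name__ == "__main__":
    # Illustrative numbers only: 50 rps per dyno, backend capped at 300 rps.
    for dynos in (1, 2, 4, 8, 16):
        rps = effective_throughput(dynos, per_dyno_rps=50.0, backend_limit_rps=300.0)
        print(f"{dynos:>2} dynos -> {rps:.0f} rps")
```

In this model, going from 8 to 16 dynos adds cost but no throughput, because the backend is already saturated at 300 requests per second.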

Scaling vertically: upgrading to larger dynos

Upgrading dynos to larger dyno types will provide your app with more memory and CPU resources.

All dynos are isolated from one another. However, apps running on Free, Hobby, and Standard dynos may share an underlying compute instance (they are multi-tenant) and consequently may encounter some degree of performance variance. Performance dynos and those that run in Heroku Private Spaces do not share an underlying compute instance with other dynos, so they experience low variability in performance.

Autoscaling

Heroku can automatically adjust the number of web dynos to keep your app within a specified 95th percentile (p95) response time threshold. Because it reacts to your app’s existing throughput, autoscaling removes the need to anticipate traffic spikes. Autoscaling is included at no additional cost on Performance and Private dynos.
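To make the idea of a 95% response time threshold concrete, here is a rough sketch of a threshold-based scaling decision. It is not Heroku’s actual algorithm, which the text above does not describe. The function names, the one-dyno step, and the rule of scaling down when the p95 falls below half the target are all assumptions made for illustration.

```python
def p95(samples: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # 1-based rank = ceil(0.95 * n), computed with integers to avoid float error.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def desired_dynos(
    current: int,
    response_times_ms: list[float],
    target_p95_ms: float,
    min_dynos: int,
    max_dynos: int,
) -> int:
    """Return the dyno count a simple threshold rule would ask for.

    Assumed rule (illustrative only): add one dyno when the observed p95
    exceeds the target, remove one when it is below half the target.
    """
    observed = p95(response_times_ms)
    if observed > target_p95_ms:
        proposed = current + 1
    elif observed < 0.5 * target_p95_ms:
        proposed = current - 1
    else:
        proposed = current
    # Never leave the configured range.
    return max(min_dynos, min(max_dynos, proposed))


if __name__ == "__main__":
    slow = [100.0] * 18 + [900.0, 950.0]  # p95 is 900 ms, above a 500 ms target
    print(desired_dynos(2, slow, target_p95_ms=500.0, min_dynos=1, max_dynos=5))
```

The `min_dynos` and `max_dynos` bounds keep the rule from scaling to zero or growing without limit, whatever the measurements say.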

Heroku also makes it easy for developers to scale any number of device integrations individually: they can simply provision more dynos to handle increased traffic coming in from a specific device.

If Autoscaling doesn’t cover your needs or is not working as expected for your apps, Heroku recommends trying the Rails Auto Scale add-on or Adept Scale add-on.

Scalability Simplified, with Examples

Horizontal Scalability

Suppose you have a store and expect 10 clients at a time, so you hire 2 employees to provide customer service. Suddenly, your store becomes popular and 100 clients arrive at the same time. Those 2 employees won’t be enough to meet their needs. You will need to scale horizontally, employing more people to serve more clients.

The store works like your app, website, mobile application, or system. The site was prepared for 10 users connected at a time, running on 2 web dynos. Suddenly, 100 users are loading the site at the same time. To scale horizontally in Heroku, you can add more web dynos. The incoming HTTP requests can be likened to the additional clients arriving at the store, waiting to be served.

If your store is a restaurant, the people serving the clients would be the waitresses. However, a restaurant cannot satisfy its customers with waitresses alone; there are also employees working behind the counter in the kitchen. Even if you hire 100 waitresses, a single cook won’t be able to cook for 100 people. You will need more people working in the kitchen.

Kitchen employees are the worker dynos in Heroku: they are in charge of background jobs. The more cooks you have, the more dishes they can prepare in parallel, and the same is true of worker dynos and jobs.

Vertical Scalability

Horizontal scalability might not always be enough. Continuing with the restaurant example, if the restaurant premises are too small, even if you hire 100 waitresses and 10 people in the kitchen, you won’t be able to fit enough tables. You will need to increase your capacity. You will need to scale vertically.

Another example that relates to Heroku would be a chef who only knows how to prepare juices, or a waitress who only speaks one language. That chef won’t be able to add more items to your menu, and that waitress won’t be able to serve clients who speak other languages. Adding more waitresses or cooks of the same kind won’t solve the issue; you will need to employ people who have the skills you need. In other words, you will need to scale vertically.

Autoscaling

So, what would happen if the demand in your restaurant is variable?

It wouldn’t be cost-effective to pay 100 waitresses full-time. Instead, you need a core of full-time employees plus people you can call in on short notice when more customers arrive. This is what Heroku offers with its autoscaling system. Developers can set up 10 dynos and have additional dynos added when the response time is slow. When the workload increases, Heroku automatically provides the extra dynos needed to cope with the demand. Once the demand decreases, it automatically removes them, allowing you to save money.

Heroku offers a practical step-by-step guide showing you how to scale your dyno formation easily. If you want to learn more about Heroku, click here; you will find useful information and three ways it enhances cloud infrastructure.